Meta Adds Assistance for Vision-Impaired Users in Ray-Ban AI Glasses

Meta has added a new accessibility element to its Ray-Ban Meta glasses, with vision-impaired users now able to call on volunteers for assistance with tasks while wearing the device.

Which could expand the usage potential for Meta’s sunglasses, while also potentially opening up a whole new opportunity for services-based businesses to cater to customer/user requests by accessing the cameras on the device.

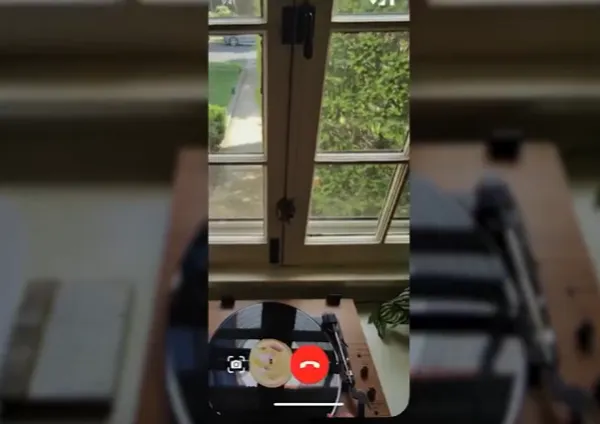

As you can see in the above examples, when a user is wearing their Ray-Ban Meta glasses (and has connected the Be My Eyes app), they’ll be able to say “Hey Meta, call Be My Eyes” and ask for assistance with a task. That will then open up a new video call window, enabling a volunteer to see what you’re seeing and provide advice to help with your problem.

Which has immediate value for vision-impaired users, but the same approach could also apply to any user, facilitating direct connection and advice by tapping into their field of view.

Service businesses could use this as a means to help solve problems, or as a value-add to quickly assess and quote on potential issues.

Which could further expand the market potential for the device, with Meta’s Ray-Bans already selling well, and becoming a key companion for AI-enabled interaction.

Indeed, Meta’s now calling them “AI glasses” as opposed to “smart glasses” as it looks to put more emphasis on its assistive elements.

Meta’s also planning to launch a new version of the device later this year, while it continues to work on its fully AR-enabled glasses, which are scheduled for release in 2027.

Expanded use cases like this take advantage of the glasses’ built-in cameras and connectivity, and could make Meta’s AI glasses a more valuable utility for many users.

An interesting expansion either way, which opens up more considerations for Meta’s future direction.
